Why intelligent machines also need smart policies

This blog was written by Nicolas Zahn, Former Consultant, Digital Government Team at the OECD

At a recent OECD Conference, speakers from various backgrounds joined forces to discuss the role public policy plays in supporting the development and implementation of Artificial Intelligence (AI) for the benefit of societies. Although we are only at the beginning of this transformation, AI already affects our everyday lives. This raises complex questions in areas ranging from data governance to labour market regulation and education. Consequently, smart policies are needed to make the most of intelligent machines.

In late October, stakeholders from the public and private sectors, civil society and academia came together at the conference “Artificial Intelligence: Intelligent Machines, Smart Policies”, organized as part of the OECD Going Digital project. Given that aspects of AI already have a considerable impact on our everyday lives, from online search results and hiring decisions to public health and public transportation, the conference asked what sort of policy and institutional frameworks should guide AI design and use, and how we can ensure that AI benefits society as a whole.

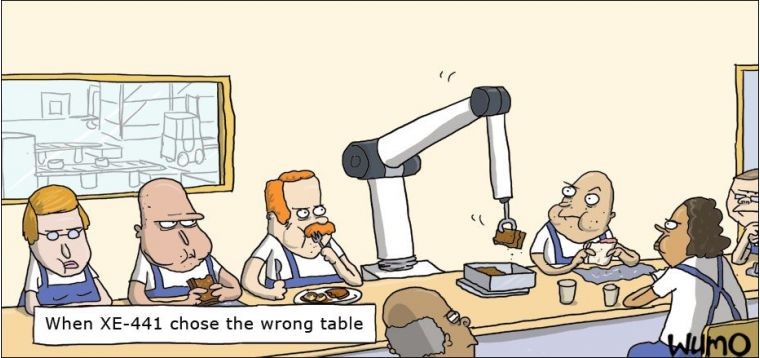

Right from the beginning of the conference, which opened with an overview of the current state of AI research based on the OECD Digital Economy Outlook, speakers made clear that AI potentially affects all policy fields, from education to the labour market. The public discussion focuses on a scenario in which AI will replace not only low-skilled labour but also knowledge workers. Garry Kasparov, who gave the opening remarks, noted that he was probably the first knowledge worker whose job was taken by AI. However, speakers from academia and the private sector were keen to highlight that we are still at the beginning of AI's potential and that, at this stage, AI is used to augment human work rather than to replace it. Hence, the popular narrative of “robots taking away our jobs” should be taken with a grain of salt. The impact of AI on labour markets merits further study to support evidence-based policymaking, not least because current statistics lend support to both optimistic and pessimistic scenarios. Public policy matters here because it can help shape the role that work will play in our future societies. Education also plays a key role, and action is needed now, since adjustments to education systems take much longer to come into effect than technological progress does. But which scenario should policymakers plan for when the future of AI is still uncertain?

While several socially beneficial use cases of AI were presented, researchers conceded that a variety of issues, such as the lack of transparency of algorithms and bias, pose problems that should be addressed from a design as well as a policy perspective. While policymakers need to adjust the frameworks guiding the use of AI, the creators of AI need to adjust their thinking about its design. At the moment, AI is designed to optimize a given objective. What we should be focusing on instead is designing AI that delivers results in line with people’s wellbeing. By observing human reactions to various outcomes, AI could learn what our preferences are through a technique called cooperative inverse reinforcement learning, and then work towards producing results consistent with those preferences.

The conference also showed that knowledge about AI and willingness to use it are unevenly distributed across the globe and are shaped not just by resources but also by cultural factors. Some governments have pushed for R&D in this area more than others. Asian, Nordic and Baltic states in particular presented their current situation as well as their visions for the future place of AI in society. The common theme was that AI will play a major role in all aspects of life and public policy. But while the country presentations focused on visions of the future, current use cases from industry showed that today's early forms of AI still struggle with very practical problems, from understanding human input in software to making sense of the environment in autonomous transportation. Private-sector representatives therefore cautioned policymakers against creating policies today for science fiction, urging them instead to focus on what current technology is actually capable of doing. Particularly with regard to safety, security and privacy, speakers noted that existing policy frameworks contain concepts that remain relevant, but that these frameworks will need to be adapted to ensure that AI benefits societies. Several speakers presented concrete proposals, such as the AI principles of the Future of Life Institute.

Given the unequal distribution of AI know-how, the ease with which the technology can spread around the globe, and the important role multinational companies play in this field, speakers reaffirmed, both before and during the conference, the importance of a global dialogue on AI governance. Fora such as the G7, but also the OECD, were cited as valuable focal points for facilitating such a global dialogue and for providing a space for evidence-based policymaking and the exchange of knowledge on concrete examples and practices.

After a tour d’horizon across several policy fields, from education and labour markets to liability and competition law, the conference concluded with a remarkable degree of consensus on the next step: a global dialogue on smart policies, i.e. policies that manage to balance fostering innovation with safeguarding rights. Such a dialogue should lead not only to talk but also to action. The coming years represent a critical window of opportunity for policymaking in the field of AI, before technological progress locks in development trajectories, makes governance harder or creates negative side effects. Upcoming policy dialogue should focus on creating human-centric AI and follow a multi-stakeholder approach. While no one-size-fits-all approach exists and country-specific elements need to be taken into consideration, the important questions around the globe relate to transparency and accountability, data privacy, bias and ethics. The OECD Going Digital project will continue to support stakeholders in this discussion with additional publications on the various fields affected by AI. The work of the Observatory of Public Sector Innovation and the Digital Government team will be a primary contribution to the horizontal Going Digital project, supporting policymakers through reports, recommendations and workshops.