Call for Comments: Artificial Intelligence (AI) Primer

Announcement: Public consultation has now been extended to 15 September 2019.

Update: The final version of the AI primer has been published.

As we mentioned in a blog post a few months ago, OPSI has been working to develop a “primer” on AI to help public leaders and civil servants navigate the challenges and opportunities associated with the technology and understand how it may help them achieve their missions.

Today, we are excited to launch a public consultation on our initial draft of the primer, which we have tentatively titled Hello, World: Artificial Intelligence and its Use in the Public Sector. “Hello, World!” is often the very first computer program written by someone learning how to code, and we want this primer to help public officials take their first steps in exploring AI. This is the second in a series of primers on topics of interest for the innovation community, following on from the June 2018 Blockchains Unchained.

The AI primer has three purposes:

- Help governments understand the definitions and context for AI, as well as some technical underpinnings and approaches.

- Explore how governments and their partners are developing strategies for and using AI for public good.

- Understand what implications public leaders and civil servants need to consider when exploring AI.

To make sure we get these right, we need your input. Are you interested in the potential for AI to transform government? Do you have knowledge or expertise in the field of AI that you would like to contribute? Are you skeptical about AI in government? We are interested in hearing your thoughts and feedback on the draft.

Share your comments, feedback, and contributions by 15 September 2019.

You can contribute in three ways:

- Adding comments to a collaborative Google Doc.

- Adding comments and edits (in tracked changes) directly to a .doc version and e-mailing it to us at [email protected]. A PDF version is also available.

- Leaving comments at the end of this blog post.

OPSI is open to all types of constructive feedback through the consultation, including:

- Does the report strike the right balance between being technically sound and being accessible for civil servants?

- Are there any gaps, inaccurate statements, or missed opportunities? For instance, there is some debate on whether rules-based approaches should be considered as being truly AI. Did we address this appropriately?

- Are there additional examples, tools, resources, or guidance that civil servants should be aware of?

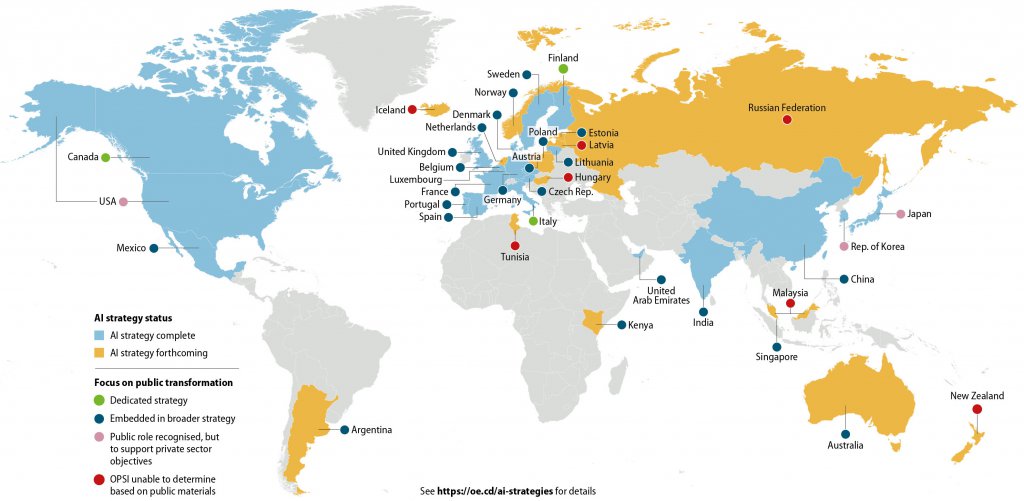

As a companion piece for the draft primer, we have also developed an AI Strategies & Public Sector Components page, which discusses each country’s complete or forthcoming national AI strategy, or comparable guiding policies that set forth its strategic vision and approach to AI. This includes a focus on the extent to which each specifically addresses public sector innovation and transformation. The site also includes links to the key strategy and policy documents.

AI is also an issue gaining traction within the OECD, where horizontal teams have integrated AI as part of the Going Digital initiative and have published AI Principles and an OECD Recommendation. In addition, our colleagues from the Digital Economic Policy Division recently published the Artificial Intelligence in Society report, and colleagues in the Digital Government team and OECD Working Party of Senior Digital Government Officials (E-Leaders) are drafting a working paper on state-of-the-art uses of different kinds of emerging tech (including AI) in governments. OPSI’s AI primer seeks to complement the great work of these teams.

We look forward to hearing your thoughts on the draft and hope that you can help us ensure that it is a useful tool for helping public servants better understand AI, how it can be used in government, and the associated challenges and implications.

Findings from the Primer

AI has a long history and its definition and purpose are context-specific

Although AI has been a hot topic in recent years, it has been researched and discussed for over 70 years. Over that time, there have been several cycles where expectations soared, but then people turned away after the tech failed to live up to its hype. There is no uniformly accepted definition of AI because it means different things to different people, including those of us in the public sector. The primer seeks to provide civil servants with a knowledge base about the history of AI, what it can mean for government, and where it may be going in the future.

AI is technically complex, but civil servants need to know the basics

At a technical level, while there are a variety of forms of AI, all AI today can be classified as “narrow AI”. In other words, it can be leveraged for specific tasks for which computers are well suited, such as understanding text, classifying objects, and understanding spoken language. Machine learning approaches such as “unsupervised learning”, “supervised learning”, “reinforcement learning”, and “deep learning” hold significant potential for a variety of tasks, yet each has its own strengths and limitations. While complex, each of these can be broken down into building blocks. The primer seeks to explain them in a way that provides civil servants with essential details, but doesn’t weigh them down with a level of technical detail that most won’t need.
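For readers who like to see the building blocks in code, here is a minimal sketch of the difference between supervised and unsupervised learning. It is not taken from the primer: the data (processing times in days for hypothetical service requests), the labels, and the function names are all assumptions made up for illustration, and real systems use far richer data and dedicated libraries.

```python
# Toy illustration only: the data, labels, and function names are hypothetical.

def nearest_neighbor_predict(labeled_examples, value):
    """Supervised learning: predict a label by copying the label of the
    closest example that a human has already labeled."""
    closest = min(labeled_examples, key=lambda pair: abs(pair[0] - value))
    return closest[1]


def two_means(values, iterations=10):
    """Unsupervised learning: split unlabeled numbers into two groups
    (a one-dimensional k-means with k=2). No labels are provided."""
    low, high = min(values), max(values)  # initial group centers
    group_low, group_high = list(values), []
    for _ in range(iterations):
        group_low = [v for v in values if abs(v - low) <= abs(v - high)]
        group_high = [v for v in values if abs(v - low) > abs(v - high)]
        if not group_high:  # all values identical: nothing to split
            break
        low = sum(group_low) / len(group_low)
        high = sum(group_high) / len(group_high)
    return sorted(group_low), sorted(group_high)


# Supervised: past requests labeled by staff, by processing time in days.
labeled = [(2, "routine"), (3, "routine"), (20, "complex"), (25, "complex")]
prediction_short = nearest_neighbor_predict(labeled, 4)
prediction_long = nearest_neighbor_predict(labeled, 18)

# Unsupervised: the same kind of data without labels; the algorithm
# finds the two groups on its own but cannot name them.
groups = two_means([2, 3, 4, 20, 22, 25])
```

In the supervised case the labels come from people and the program learns to reproduce them; in the unsupervised case the program only discovers that two groups exist, and it is still up to a person to decide what those groups mean.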

Governments hold a special role in the AI ecosystem, and they are taking action

AI holds great promise for the public sector and places governments in a unique position. They are charged with setting national priorities, investments and regulations for AI, but are also in a position to leverage its immense power to innovate and transform the public sector, redefining the ways in which it designs and implements policies and services. OPSI has done an initial mapping and has identified 38 countries (including the EU) that have launched, or have plans to launch, AI strategies. Of these, 28 have (or plan to have) a strategy focused specifically on public sector AI. Many governments have also launched real-world projects that use AI to improve government in many ways, as discussed throughout the report.

While the potential is great, so are the considerations governments must make

Through research and interviews, a number of key considerations have risen to the surface. As discussed in the primer, governments must:

- Provide support and a clear direction but leave space for flexibility and experimentation.

- Develop a trustworthy, fair and accountable approach to using AI.

- Secure ethical access to, and use of, quality data.

- Ensure government has access to internal and external capability and capacity.

The volume of considerations that civil servants must take into account may seem overwhelming. However, governments have devised approaches to addressing them. This guide discusses these approaches, and the potential exists for some to be adapted for use in other governments and contexts.

AI can support all facets of innovation

As OPSI’s work has shown over the last few years, innovation is not just one thing. Innovation takes different forms, and each should be considered and appreciated in the public sector. OPSI has identified four primary facets to public sector innovation:

- Mission-oriented innovation sets a clear outcome and overarching objective for achieving a specific mission.

- Enhancement-oriented innovation upgrades practices, achieves efficiencies and better results, and builds on existing structures.

- Adaptive innovation tests and tries new approaches in order to respond to a changing operating environment.

- Anticipatory innovation explores and engages with emergent issues that might shape future priorities and future commitments.

AI is exciting because it is a general purpose technology with the potential to cut across and touch on the multiple facets of innovation. For instance, global leaders already have strategies in place to build AI capacity as a national priority (mission-oriented). AI can be used to make existing processes more efficient and accurate (enhancement-oriented). It can be used to consume unstructured information, such as tweets, to understand citizen opinions (adaptive). Finally, in looking to the future, it will be important to consider and prepare for the implications of AI on society, work, and human purpose (anticipatory).

We want your feedback

We need your thoughts in order to ensure this primer is useful to civil servants. Please submit all comments, feedback, and contributions by 15 September 2019. You can do this in three ways:

- Adding comments to a collaborative Google Doc.

- Adding comments and edits (in tracked changes) directly to a .doc version and e-mailing it to us at [email protected]. A PDF version is also available.

- Leaving comments at the end of this blog post.